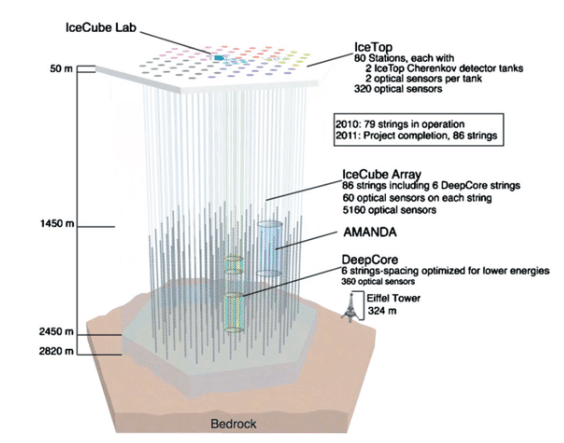

IceCube is the world’s premier facility for detecting neutrinos with energies above approximately 10 GeV. It is a pillar of the National Science Foundation’s Multi-Messenger Astrophysics (MMA) program, one of the top 10 NSF priorities. The detector is located at the geographic South Pole and was completed at the end of 2010. It is designed to detect the interactions of neutrinos of astrophysical origin by instrumenting over a gigaton of polar ice with 5,160 optical sensors.

About

Like its predecessor, the Antarctic Muon And Neutrino Detector Array (AMANDA), IceCube consists of spherical optical sensors called Digital Optical Modules (DOMs), each with a photomultiplier tube (PMT) and a single-board data acquisition computer that sends digital data to the counting house on the surface above the array. DOMs are deployed on strings of 60 modules each, at depths between 1,450 and 2,450 meters, in holes melted in the ice using a hot water drill. IceCube is designed to look for point sources of neutrinos in the teraelectronvolt (TeV) range to explore the highest-energy astrophysical processes. The project is a recognized CERN experiment (RE10).

IceCube is a remarkably versatile instrument addressing multiple disciplines, including astrophysics, particle physics, and geophysical sciences. It operates continuously throughout the year and is simultaneously sensitive to the whole sky. The detector produces data that has been used for a wide array of cutting-edge analyses.

The IceCube collaboration has discovered extremely energetic neutrinos from the cosmos and initiated a joint neutrino-electromagnetic emission MMA observation, which found the first confirmed high-energy astrophysical neutrino point source, the active galactic nucleus TXS 0506+056. The former discovery was recognized with the Physics World Breakthrough of the Year award for 2013. The latter made IceCube one of the pillars on which the NSF is building an MMA program that has been declared a high-level priority area for the future.

In November 2013 it was announced that IceCube had detected 28 neutrinos that likely originated outside the Solar System.

The Challenge

With the recent upgrade of the IceCube detectors, the computing demand for running and testing IceCube piloting activities will increase significantly, driving an even greater demand for GPUs. The IceCube experiment can currently draw on 300-500 GPUs for production, whereas it would need about 1,000 GPUs (GTX 1080 equivalent).

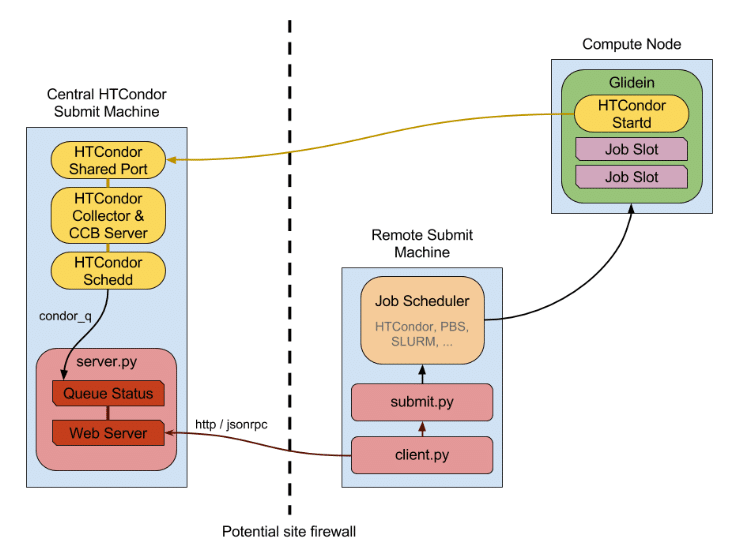

To support this new demand, the IceCube Neutrino Observatory used HTCondor to integrate all purchased GPUs into a single resource pool to which IceCube submitted their workflows from their home base in Wisconsin. This was accomplished by aggregating resources in each cloud region and then aggregating those aggregators into a single global pool at the San Diego Supercomputer Center (SDSC).
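The two-level aggregation described above can be pictured with a small Python model. This is only an illustrative sketch, not the HTCondor configuration IceCube used: the class names `RegionAggregator` and `GlobalPool`, the region names, and the GPU counts are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class RegionAggregator:
    """Collects the GPU counts reported by the instances of one cloud region."""

    region: str
    gpus_by_instance: dict[str, int] = field(default_factory=dict)

    def register(self, instance_id: str, gpus: int) -> None:
        # Record (or update) how many GPUs this instance contributes.
        self.gpus_by_instance[instance_id] = gpus

    def total_gpus(self) -> int:
        return sum(self.gpus_by_instance.values())


@dataclass
class GlobalPool:
    """Top-level pool that only talks to the regional aggregators."""

    aggregators: list[RegionAggregator] = field(default_factory=list)

    def attach(self, aggregator: RegionAggregator) -> None:
        self.aggregators.append(aggregator)

    def total_gpus(self) -> int:
        # The global view is the sum of the regional views.
        return sum(a.total_gpus() for a in self.aggregators)

    def summary(self) -> dict[str, int]:
        return {a.region: a.total_gpus() for a in self.aggregators}


def build_example_pool() -> GlobalPool:
    # Region names and GPU counts are made up for illustration.
    region_a = RegionAggregator("region-a")
    region_a.register("vm-1", 4)
    region_a.register("vm-2", 8)

    region_b = RegionAggregator("region-b")
    region_b.register("vm-3", 2)

    pool = GlobalPool()
    pool.attach(region_a)
    pool.attach(region_b)
    return pool
```

Calling `build_example_pool().summary()` gives one GPU total per region. The point of the hierarchy is that the global pool keeps one connection per region rather than one per instance.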

Architecture

The IceCube services are based on:

- A central HTCondor Submit Machine.

- A Python server-client pair for submitting HTCondor glidein jobs on remote batch systems.

The architecture is completed by several clients running on remote submit machines. Each client submits glideins, which connect back to the central HTCondor machine and advertise slots for jobs to run in. Jobs then run as normal.
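The glidein pattern can be sketched as a toy Python model: jobs wait in a central queue, glideins started at remote sites advertise slots, and queued jobs are then assigned to free slots. This is a deliberately simplified illustration under stated assumptions. The names `Slot` and `CentralPool`, the site and job names, and the first-come-first-served matching rule are hypothetical; real HTCondor matchmaking is considerably richer.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field


@dataclass
class Slot:
    """A slot advertised by a glidein running at a remote site."""

    site: str
    gpus: int
    job: str | None = None  # name of the job running in the slot, if any


@dataclass
class CentralPool:
    """Toy stand-in for the central machine that glideins connect back to."""

    slots: list[Slot] = field(default_factory=list)
    queue: deque[tuple[str, int]] = field(default_factory=deque)

    def advertise(self, site: str, gpus: int) -> Slot:
        # A glidein has started at a remote site and reports a free slot.
        slot = Slot(site, gpus)
        self.slots.append(slot)
        return slot

    def submit(self, job_name: str, gpus_needed: int) -> None:
        self.queue.append((job_name, gpus_needed))

    def match(self) -> list[tuple[str, str]]:
        """Assign queued jobs to free slots in submission order.

        Returns the (job, site) pairs matched in this pass; jobs that
        find no suitable slot stay in the queue.
        """
        matched: list[tuple[str, str]] = []
        waiting: deque[tuple[str, int]] = deque()
        while self.queue:
            job_name, gpus_needed = self.queue.popleft()
            slot = next(
                (s for s in self.slots if s.job is None and s.gpus >= gpus_needed),
                None,
            )
            if slot is None:
                waiting.append((job_name, gpus_needed))
            else:
                slot.job = job_name
                matched.append((job_name, slot.site))
        self.queue = waiting
        return matched


def run_example():
    pool = CentralPool()
    pool.submit("sim-1", 1)
    pool.submit("sim-2", 2)
    pool.submit("sim-3", 1)
    before = pool.match()  # no glideins have connected yet
    pool.advertise("site-a", 1)
    pool.advertise("site-b", 2)
    after = pool.match()
    return before, after, list(pool.queue)
```

In `run_example`, nothing is matched until the two glideins advertise their slots; afterwards two jobs start and the third keeps waiting for another slot. Jobs are submitted once to the central queue and do not need to know which site ends up running them.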

The Solution

Two resource providers of the EGI Federation contribute additional GPU resources (e.g. NVIDIA 1080Ti, NVIDIA T4) to this resource pool. From August 2021, a Collaboration Agreement between IceCube and two EGI resource centres allows the scientific community to extend the initial pool of GPU resources for running and testing IceCube piloting activities.

This Collaboration Agreement guarantees the IceCube Neutrino Observatory access to the following GPU resources:

- VM with 2 GPUs, 64 CPU cores, 512 GB RAM (6 nodes).

- VM with 10 GPUs, single root PCI, 12 CPU cores, 186 GB RAM.

- VM with 8 GPUs (NVIDIA 1080Ti, NVIDIA T4), 300 GB RAM, 95 CPU cores.

These extra resources will be federated into the central HTCondor Submit Machine.

- The EGI computing and storage resources such as the EGI Cloud Compute and the EGI Online Storage to distribute the computations.

- User registration and authentication mechanisms connected to EGI Check-In.

- Technical support to access and use the accelerated computing of EGI.

Impact

registered in the EGI Operations Portal.

consumed over the last two years

consumed over the last two years